It's time for another NOWHERE tech write-up. I've been tweeting about my work up to the point where I was nudged to write a longer blog post about what the hell I'm actually doing, so this is my attempt at just that: a chronological account of my trials and tribulations, and of where I finally ended up.

The importance of tooling cannot be overstated. There are no tools out there for the kind of deeply procedural game we're working on, and good tooling makes up 90% of what makes the game, as nearly all of our content is procedural in one way or another rather than handmade. If there's currently a lack of procedural content out there, it's precisely because of the lack of tooling.

So I set out to construct an IDE in which assembling procedures in a maintainable way became easier. Inspired by UE4's Blueprints, I began with graph-based editing as a guide, as graphs make it relatively easy to describe procedural flow. As a warm-up, I wrote two tiny C libraries: a theming library based on Blender's UI style, and a low-level semi-immediate UI library that covers the task of laying out and processing widget trees.

The IDE, dubbed Noodles, was written on top of the fabled 500k big LuaJIT. The result looked like this:

|

| Demonstrating compaction of node selections into subnodes, ad absurdum ;-) |

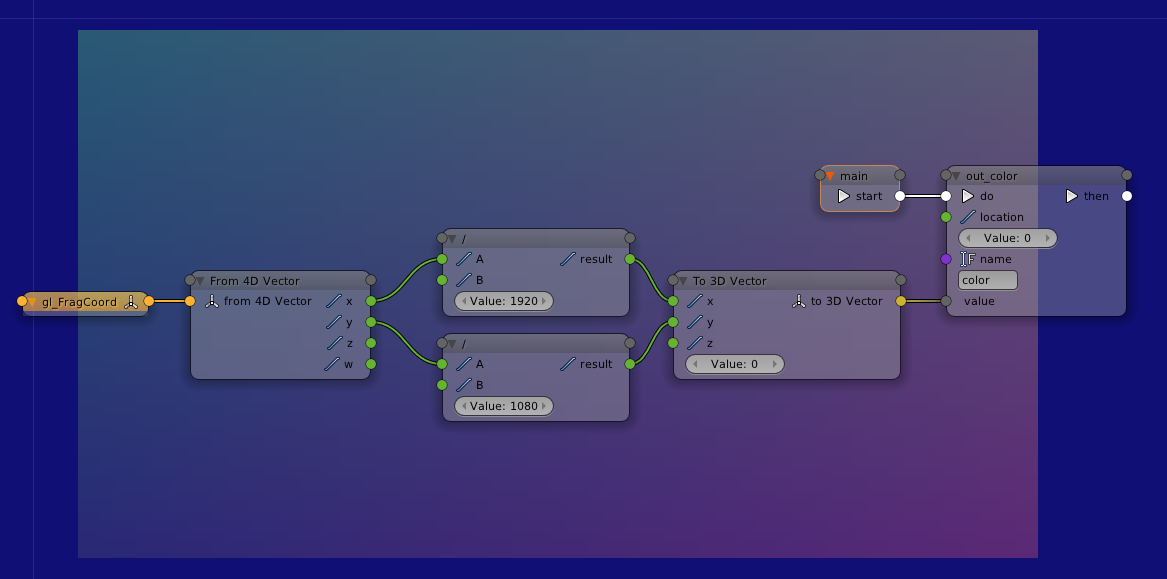

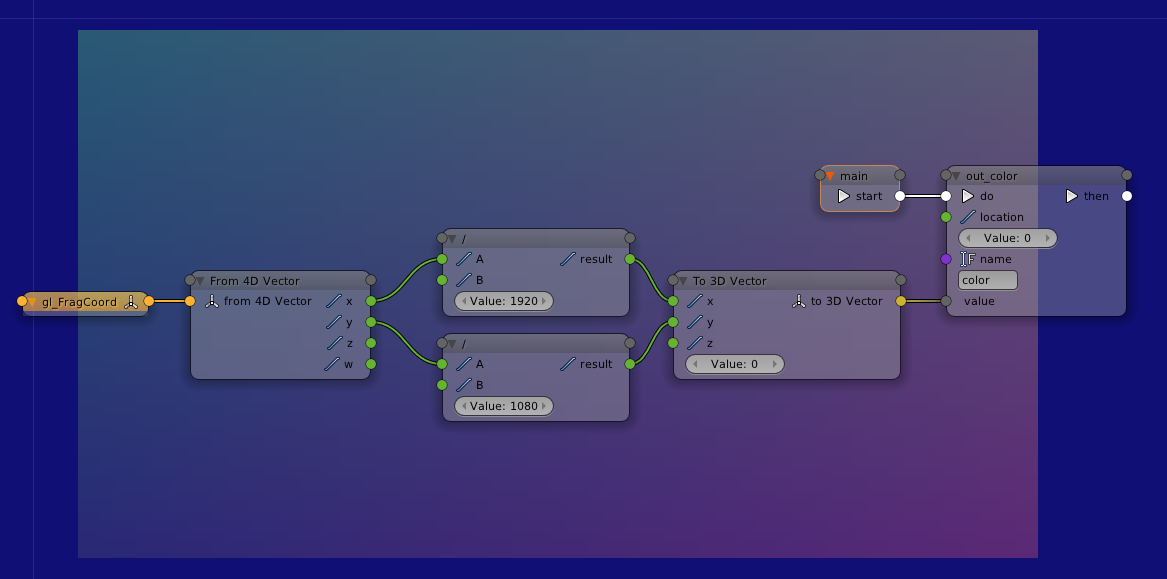

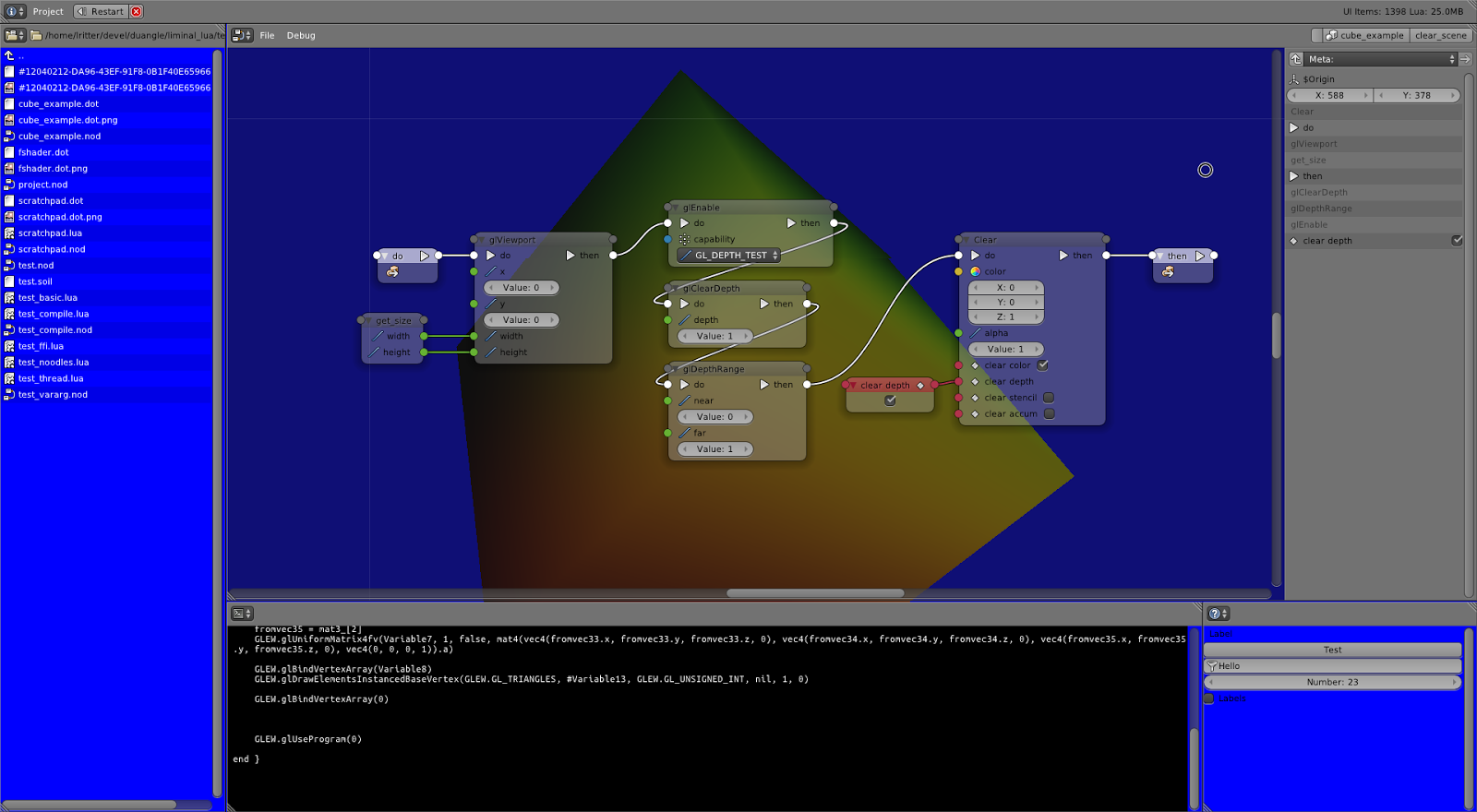

A back-end compiler translated these graph nodes back to Lua code to keep execution reasonably efficient, and I added support for GLSL code generation, something I had been planning from the beginning. Covering different targets (dynamic programming, static CPU, GPU pipelines) with a single interface paradigm became somewhat of a priority.
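To make the idea of one graph feeding several back-ends concrete, here is a rough sketch. It is written in Python rather than the Lua that Noodles ran on, and the `Node` class, the operator set and the `to_lua`/`to_glsl` helpers are all hypothetical names invented for illustration; the actual Noodles compiler is not shown in this post.

```python
from dataclasses import dataclass

# Hypothetical node type: "input" and "const" are leaves, the rest are binary ops.
@dataclass(frozen=True)
class Node:
    op: str
    args: tuple = ()

# Operators that happen to be spelled the same in Lua and GLSL.
BINARY = {"add": "+", "sub": "-", "mul": "*"}

def emit(node):
    """Turn a node tree into an infix expression shared by both targets."""
    if node.op == "const":
        return repr(float(node.args[0]))
    if node.op == "input":
        return node.args[0]
    if node.op in BINARY:
        left, right = (emit(a) for a in node.args)
        return f"({left} {BINARY[node.op]} {right})"
    raise ValueError(f"unknown node type: {node.op}")

def inputs(node, seen=None):
    """Collect input names in order of first appearance."""
    seen = [] if seen is None else seen
    if node.op == "input":
        if node.args[0] not in seen:
            seen.append(node.args[0])
    elif node.op in BINARY:
        for child in node.args:
            inputs(child, seen)
    return seen

def to_lua(name, node):
    params = ", ".join(inputs(node))
    return f"local function {name}({params}) return {emit(node)} end"

def to_glsl(name, node):
    params = ", ".join(f"float {p}" for p in inputs(node))
    return f"float {name}({params}) {{ return {emit(node)}; }}"

# x * 2 + y as a graph
graph = Node("add", (Node("mul", (Node("input", ("x",)), Node("const", (2,)))),
                     Node("input", ("y",))))

print(to_lua("f", graph))
print(to_glsl("f", graph))
```

Only the thin wrappers differ per target here; a real back-end would also have to deal with types, control flow and target-specific built-ins.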

|

| A simple GLSL shader in nodes, with output visible in the background. |

The workflow was pretty neat for high-level processing, but working with the mouse wasn't fast enough to construct low-level code from scratch - refactoring was way easier, though.

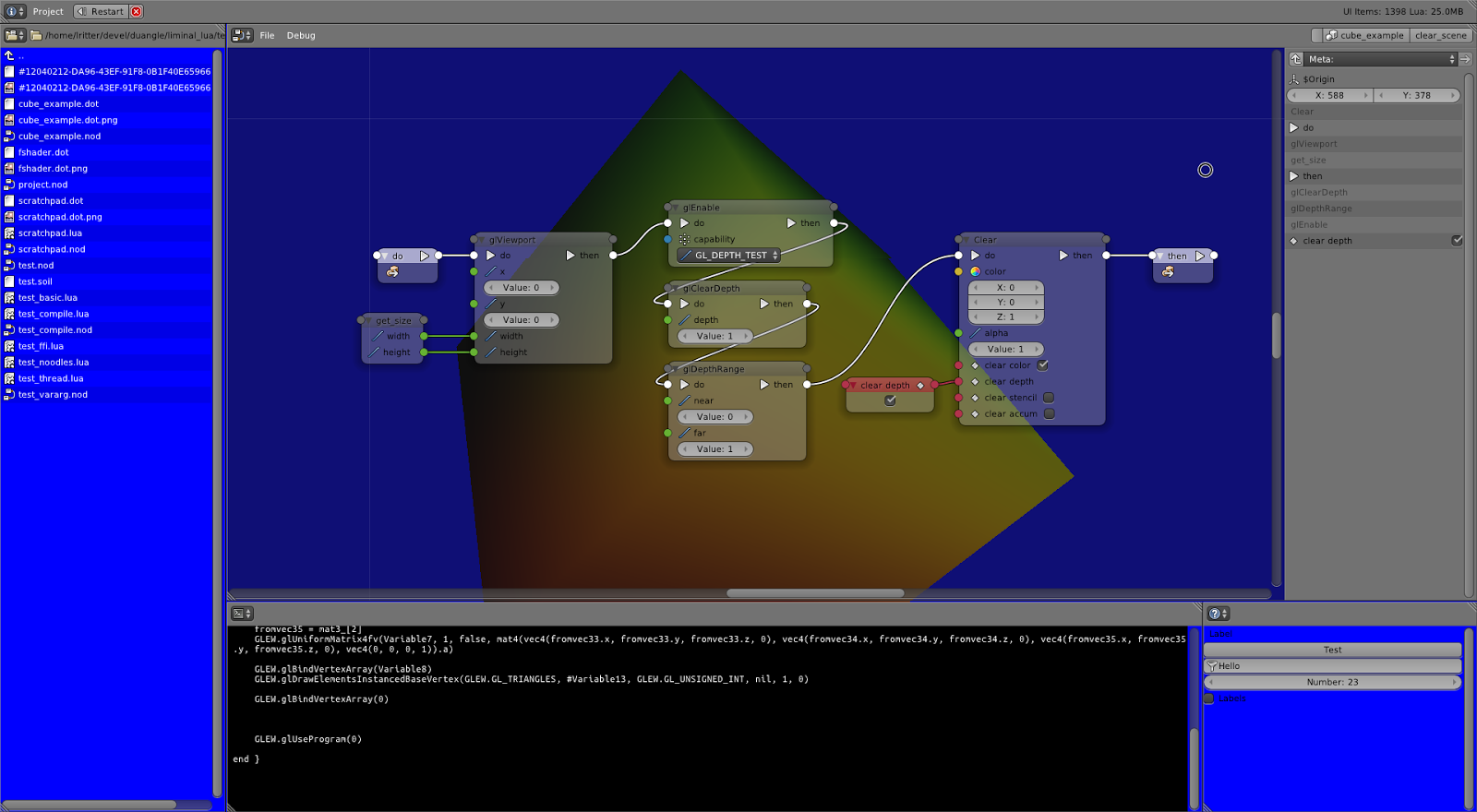

|

| Noodles, shortly before I simplified the concept. Live-editing the OpenGL code for a cube rotating in the background. |

I still didn't have much of an idea what the semantics of programming with nodes were going to be. I felt that the system should be able to analyze and modify itself, but a few design issues cropped up. The existing data model was already three times more complex than it needed to be. The file format was kept in text form to make diffing possible, and the clipboard also dealt with nodes in text form, but the structure was too bloated to make manual editing feasible. The foundation was too heavy, and it had to become lighter before I felt ready to work on more advanced features.

At this point, I didn't know much about building languages and compilers. I researched which existing programming languages were structurally compatible with Noodles, and with data flow programming in general. A suitable language had to be completely data flow oriented, and therefore functional in nature. Its AST had to be simple enough to make runtime analysis and generation of code possible. The system had to work without a graphical representation, and be universal enough to be retargeted to many different domain-specific graphs.

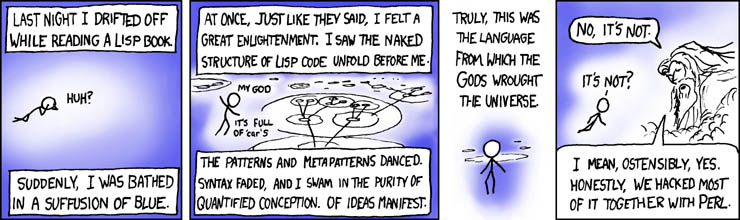

It turned out the answer had been there all along. Since 1958, to be exact.

Or 1984, if we start with SICP. Apparently everyone but me has been in CS courses, and knows this book and the fabled eval-apply duality. I never came into contact with Lisp or Scheme early on, something that I would consider the biggest mistake of my professional career. There are two XKCD comics that are relevant to my discovery here:

Did you know the first application of Lisp was AI programming? A language that consists almost exclusively of procedures instead of data structures. I had the intuitive feeling that I had found exactly the right level of abstraction for the kind of problems we are and will be dealing with in our game.
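For readers who, like me back then, have not met the eval-apply duality: the sketch below is a deliberately tiny evaluator, written in Python for illustration only. Nested Python lists stand in for S-expressions, and the names `evaluate`, `apply_fn` and `GLOBAL_ENV` are made up for this example. The point is how little machinery is needed when code is just a tree.

```python
import operator

def evaluate(expr, env):
    """eval: decide what an expression means in an environment."""
    if isinstance(expr, str):            # a symbol: look it up
        return env[expr]
    if not isinstance(expr, list):       # a number: evaluates to itself
        return expr
    head = expr[0]
    if head == "if":
        _, test, then, otherwise = expr
        return evaluate(then if evaluate(test, env) else otherwise, env)
    if head == "lambda":
        _, params, body = expr
        return ("closure", params, body, env)
    # Anything else is a call: evaluate operator and operands, then apply.
    fn = evaluate(head, env)
    args = [evaluate(arg, env) for arg in expr[1:]]
    return apply_fn(fn, args)

def apply_fn(fn, args):
    """apply: run a procedure on already evaluated arguments."""
    if callable(fn):                     # built-in primitive
        return fn(*args)
    _, params, body, env = fn            # user-defined closure
    return evaluate(body, {**env, **dict(zip(params, args))})

GLOBAL_ENV = {"+": operator.add, "*": operator.mul, "<": operator.lt}

# ((lambda (x) (if (< x 10) (* x 2) x)) 4)
double_if_small = ["lambda", ["x"], ["if", ["<", "x", 10], ["*", "x", 2], "x"]]
print(evaluate([double_if_small, 4], GLOBAL_ENV))   # 8
```

`evaluate` calls `apply_fn` for every call expression, and `apply_fn` calls `evaluate` on the body of a closure; that mutual recursion is the whole interpreter.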

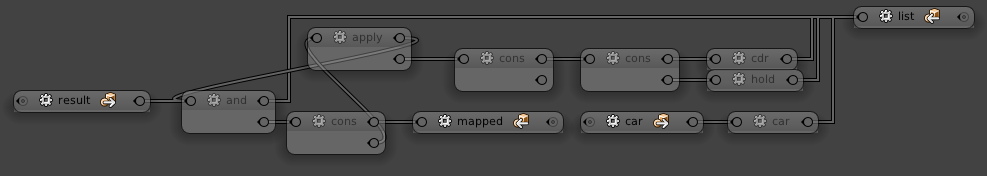

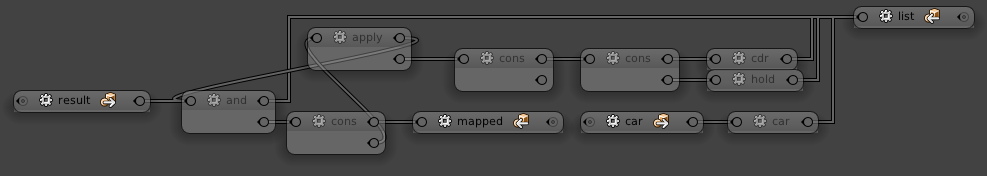

My first step was changing the computational model to a simple tree-based processing of nodes. Here's the flow graph for a fold/reduce function:

|

| Disassemble a list, route out processing to another function, then reassemble the list |
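In text form, the three steps from the caption (disassemble, process, reassemble) might look like the following Python sketch. The function names are illustrative and not taken from Noodles.

```python
def fold(fn, acc, items):
    """Left fold: split off the head, combine it with the accumulator, recurse on the tail."""
    if not items:
        return acc
    head, *tail = items                  # disassemble the list
    return fold(fn, fn(acc, head), tail) # route processing out to fn

def map_via_fold(fn, items):
    """Rebuild a list element by element, using fold to do the traversal."""
    return fold(lambda acc, x: acc + [fn(x)], [], items)  # reassemble

print(fold(lambda a, b: a + b, 0, [1, 2, 3, 4]))    # 10
print(map_via_fold(lambda x: x * x, [1, 2, 3]))     # [1, 4, 9]
```

The recursion is written for clarity; in Python a loop would be the practical choice for long lists.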

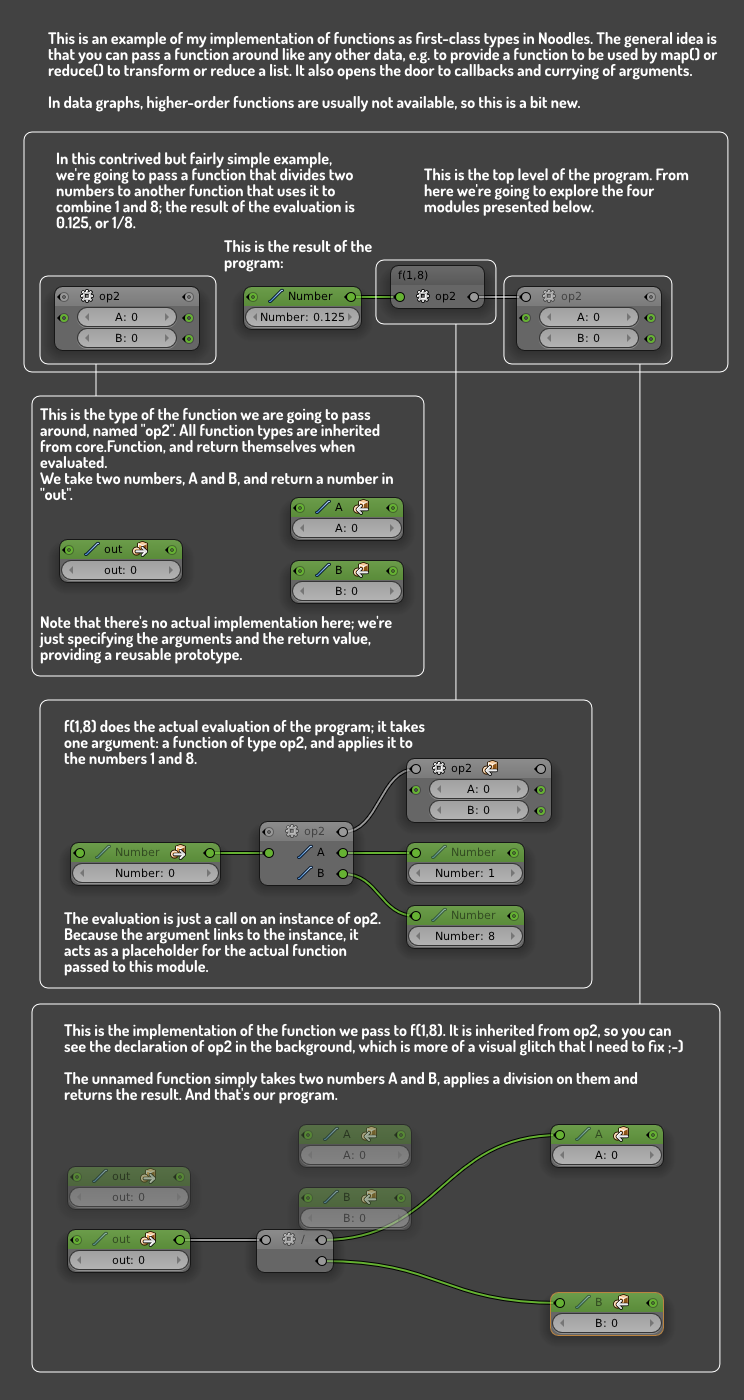

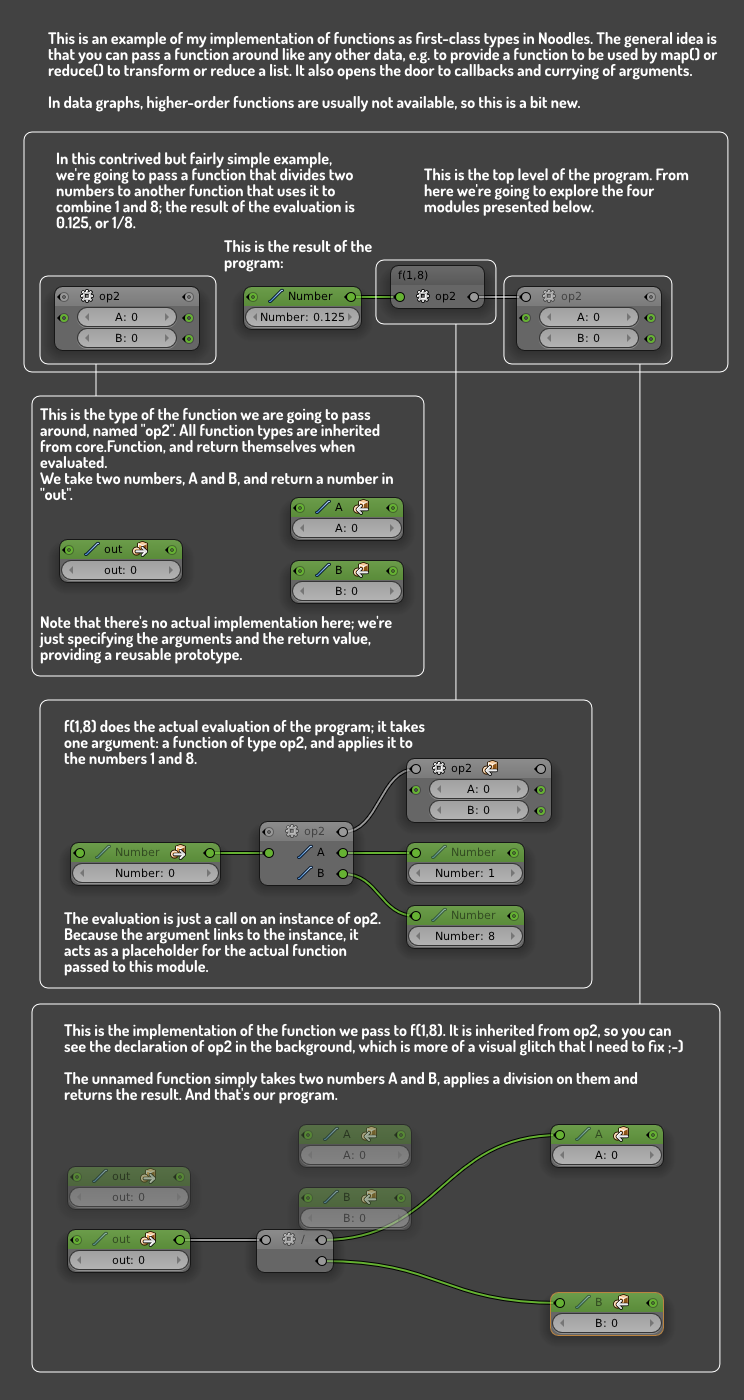

I figured out a way to do first-class functions in a graph, and made a small graphic to demonstrate it.


While these representations are informative to look at, they're neither particularly dense nor easy to construct, even with an auto-complete context box at your disposal. You're also required to manually lay out the tree as you build it; while relaxing, this necessity is not particularly productive.

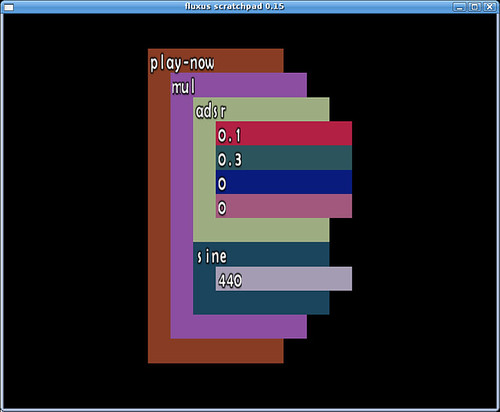

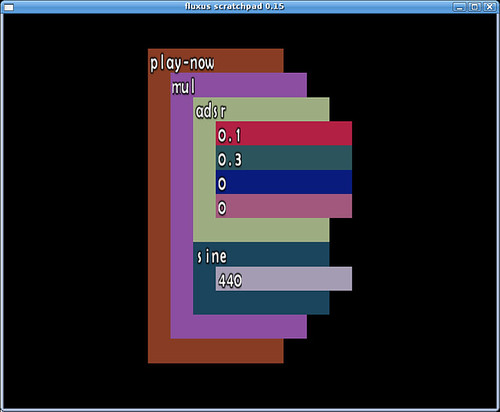

It became clear that the graph could be compacted where relationships were trivial (that is: tree-like), in the way Scheme Bricks does it:

|

| Not beautiful, but an interesting way to compact the tree |

And then it hit me: what if the editor was built from the ground up on Lispy principles? The simplest possible graphical visualization, extensible from within the editor, so that the editor would effectively become an editor-editor, an idea I've been pursuing in library projects like Datenwerk and Soil. Work on Noodles ended, and Noodles was salvaged for parts to put into the next editor, named Conspire.

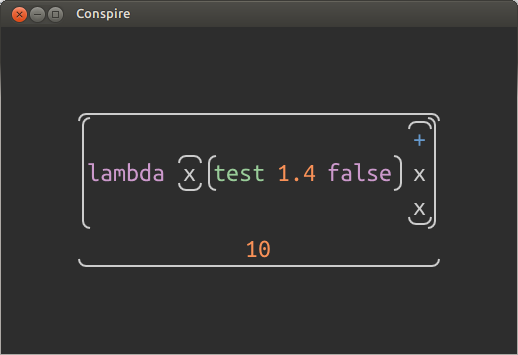

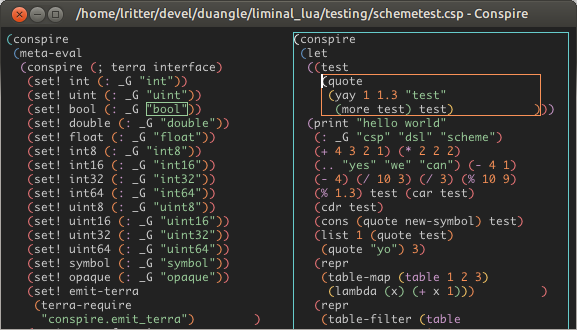

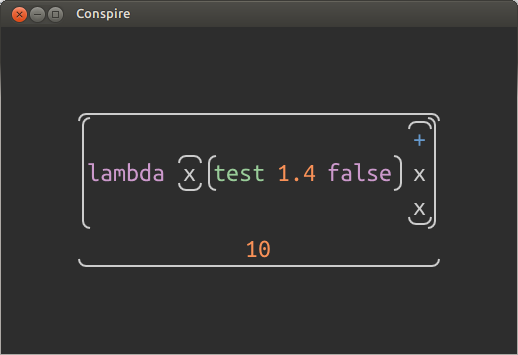

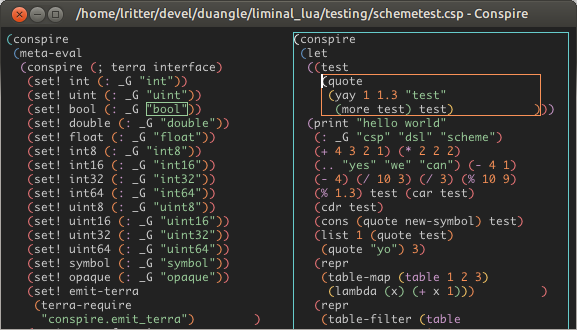

|

| A very early screenshot. Atoms are rendered as widgets, lists are turned into layout containers with varying orientation. |

At its heart, Conspire is a minimal single-document editor for a simplified S-expression tree that only knows four immutable data types: lists (implemented as Lua tables), symbols (mapped to Lua strings), strings (a Lua string with a prefix to distinguish it from symbols) and numbers (mapped to the native Lua datatype).
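As an illustration of how small such a data model can be, here is a reader and writer for the four types, sketched in Python instead of Lua. Tuples stand in for the immutable lists, and the choice of a double quote as the string prefix is an assumption made for this example; the post does not say which prefix Conspire uses, and `parse` and `dump` are hypothetical names.

```python
import re

STRING_PREFIX = '"'   # assumed marker; symbol tokens can never contain a quote
TOKEN = re.compile(r'\s*(\(|\)|"[^"]*"|[^\s()"]+)')
NUMBER = re.compile(r'-?\d+(?:\.\d+)?')

def parse(text):
    """Read one S-expression into tuples, symbols, prefixed strings and numbers."""
    tokens = TOKEN.findall(text)
    pos = 0

    def read():
        nonlocal pos
        token = tokens[pos]
        pos += 1
        if token == "(":
            items = []
            while tokens[pos] != ")":
                items.append(read())
            pos += 1                                 # skip the closing paren
            return tuple(items)                      # list
        if token == ")":
            raise SyntaxError("unexpected )")
        if token.startswith('"'):
            return STRING_PREFIX + token[1:-1]       # string (no escapes handled)
        if NUMBER.fullmatch(token):
            return float(token) if "." in token else int(token)  # number
        return token                                 # symbol

    return read()

def dump(node):
    """Write the tree back out as text."""
    if isinstance(node, tuple):
        return "(" + " ".join(dump(child) for child in node) + ")"
    if isinstance(node, str):
        return node + '"' if node.startswith(STRING_PREFIX) else node
    return repr(node)

source = '(ui-slider (:step 100 :range (0 1000)) 250 "speed")'
tree = parse(source)
print(tree)
print(dump(tree) == source)
```

Because symbols and strings share one host type, the prefix is the only thing that tells them apart, which is the same trick the paragraph above describes for Lua strings.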

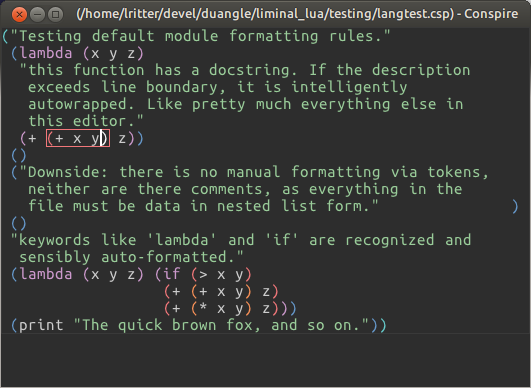

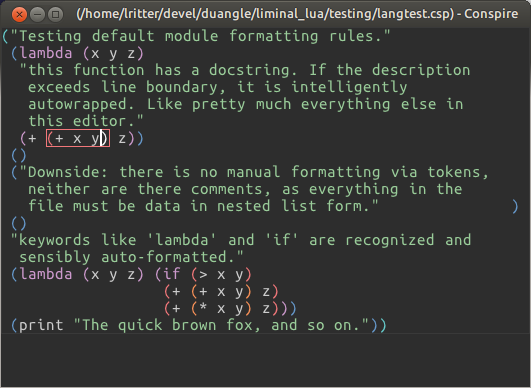

By default, Conspire maps editing to a typical text editing workflow with an undo/redo stack and all the familiar shortcuts, but the data only exists as text when saved to disk. The model is an AST; the view is that of a text editor.

|

| Rainbow parentheses make editing and reading nested structures easier. |

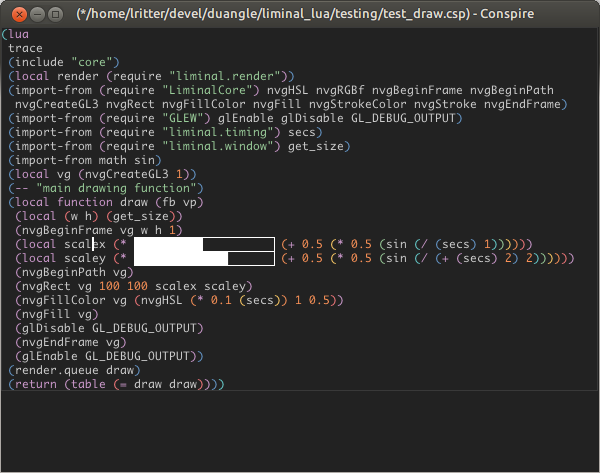

Conspire can be extended to recognize certain expressions in the same way (define-syntax) works in Scheme, and style these expressions to display different controls or data:

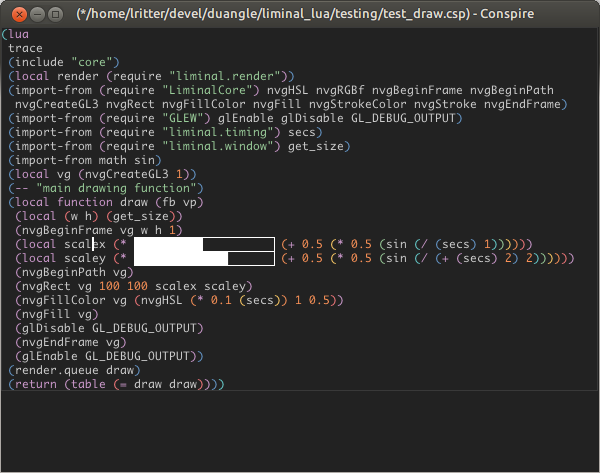

|

| A numerical expression is rendered as a draggable slider that alters the wrapped number. |
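One way to picture this kind of styling is a registry keyed on the head symbol of an expression. The Python sketch below is a guess at the general shape, not Conspire's implementation: `define_style`, `render` and the widget dictionaries are invented for illustration, and the slider form is assumed to be a head symbol, an option list and a value.

```python
STYLERS = {}   # head symbol -> function that turns the expression into a widget

def define_style(head):
    """Register a styler for every list whose first element is `head`."""
    def register(fn):
        STYLERS[head] = fn
        return fn
    return register

@define_style("ui-slider")
def style_slider(expr):
    _, options, value = expr
    opts = dict(zip(options[::2], options[1::2]))   # (:key value ...) pairs
    low, high = opts[":range"]
    return {"widget": "slider", "min": low, "max": high,
            "step": opts[":step"], "value": value}

def render(expr):
    """Styled lists become custom widgets, everything else falls back to plain views."""
    if isinstance(expr, tuple):
        if expr and expr[0] in STYLERS:
            return STYLERS[expr[0]](expr)
        return {"widget": "list", "children": [render(child) for child in expr]}
    return {"widget": "atom", "text": str(expr)}

doc = ("scale", ("ui-slider", (":step", 100, ":range", (0, 1000)), 250))
print(render(doc))
```

Unregistered expressions still render, just without special treatment, so a document stays editable even when a styler is missing.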

In the example above, the expression (ui-slider (:step 100 :range (0 1000)) <value>) is rendered as a slider widget that, when dragged with the mouse, alters the <value> slot in the AST the view represents. The operations are committed to the undo buffer the same way any other editing operation would be.
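The interplay between a widget and the undo buffer can be sketched as follows, again in Python and under assumptions: the tree is immutable, every edit produces a new tree, and the `Document`, `replace_at` and `drag_slider` names as well as the snap-and-clamp behaviour are hypothetical, chosen only to show that a drag is just another committed edit.

```python
def get_at(tree, path):
    """Follow a tuple of child indices down into the tree."""
    for index in path:
        tree = tree[index]
    return tree

def replace_at(tree, path, value):
    """Return a new tree with the node at `path` swapped out; the old tree is untouched."""
    if not path:
        return value
    index, rest = path[0], path[1:]
    return tree[:index] + (replace_at(tree[index], rest, value),) + tree[index + 1:]

class Document:
    def __init__(self, tree):
        self.tree = tree
        self.undo_stack = []
        self.redo_stack = []

    def commit(self, new_tree):
        self.undo_stack.append(self.tree)
        self.redo_stack.clear()
        self.tree = new_tree

    def undo(self):
        if self.undo_stack:
            self.redo_stack.append(self.tree)
            self.tree = self.undo_stack.pop()

    def redo(self):
        if self.redo_stack:
            self.undo_stack.append(self.tree)
            self.tree = self.redo_stack.pop()

def drag_slider(doc, path, raw_value):
    """Snap to :step, clamp to :range, then commit like any other edit."""
    _, options, _ = get_at(doc.tree, path)
    opts = dict(zip(options[::2], options[1::2]))
    low, high = opts[":range"]
    step = opts[":step"]
    snapped = min(high, max(low, round(raw_value / step) * step))
    doc.commit(replace_at(doc.tree, path + (2,), snapped))   # slot 2 holds the value

doc = Document(("scale", ("ui-slider", (":step", 100, ":range", (0, 1000)), 300)))
drag_slider(doc, (1,), 468)
print(doc.tree[1][2])   # 500
doc.undo()
print(doc.tree[1][2])   # 300
```

Keeping whole previous trees on the stack is cheap here because unchanged subtrees are shared between versions rather than copied.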

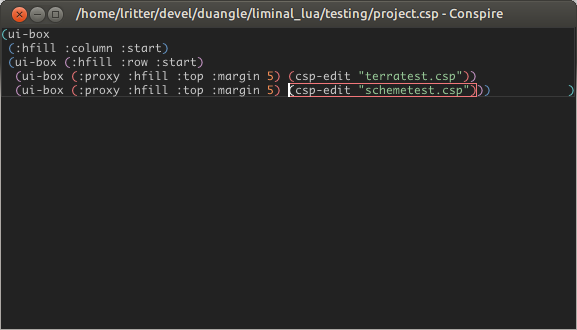

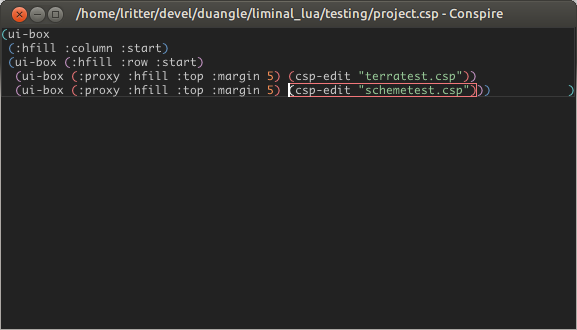

Using this principle, the editor can be gradually expanded with more editing features. One of the first things that I added was the ability to nest editors within each other. The editor's root document then acts as the first and fundamental view, bootstrapping all other contexts into place:

|

| The root document with unstyled markup. csp-edit declares a new nested editor. |

Hitting the F2 key, which toggles styling, we immediately get the interpreted version of the markup document above. The referenced documents are loaded into the sub-editors, each with its own undo stack:
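The bootstrapping step can be pictured roughly like this. The Python sketch assumes that a nested editor is declared as a csp-edit form whose second element names the document to load; the exact shape of that form is not given in this post, and `Editor`, `find_forms`, `bootstrap` and the in-memory `FILES` store are invented for the example.

```python
class Editor:
    """One editing context: a document tree plus its own undo history."""
    def __init__(self, name, tree):
        self.name = name
        self.tree = tree
        self.undo_stack = []

def find_forms(tree, head):
    """Yield every list in the tree whose first element is `head`."""
    if isinstance(tree, tuple):
        if tree and tree[0] == head:
            yield tree
        else:
            for child in tree:
                yield from find_forms(child, head)

def bootstrap(root_tree, load):
    """Create the root editor and one sub-editor per csp-edit declaration."""
    root = Editor("root", root_tree)
    subs = [Editor(form[1], load(form[1]))
            for form in find_forms(root_tree, "csp-edit")]
    return root, subs

# Stand-in for documents on disk (hypothetical names and contents).
FILES = {
    "main.csp": ("print", "hello"),
    "style.csp": ("color", 255, 128, 0),
}

root_doc = ("layout", ("csp-edit", "main.csp"), ("csp-edit", "style.csp"))
root, subs = bootstrap(root_doc, FILES.get)
print([editor.name for editor in subs])
```

Each sub-editor gets a separate undo stack, so undoing in one document leaves the others and the root markup alone.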

|

| Tab and Shift+Tab switch between documents. |

New views and controllers such as a Noodles-like graph editor could be implemented as additional AST transformers, allowing the user to shape the editor into whatever it needs to be for the tasks at hand, which in our case will be game programming.

The idea here is that language and editor become a harmoniously inter-operating unit. I'm comparing Conspire to a headless web browser where HTML, CSS and JavaScript have all been replaced with their S-expression-based equivalents, so all syntax trees are compatible with each other.

I've recently integrated the Terra low-level extensions for Lua and am now working on a way to seamlessly mix interpreted code with LLVM-compiled instructions so the graphics pipeline can completely run through Conspire, be scripted even while it is running and yet keep a C-like performance level. Without the wonders of Scheme, all these ideas would have been unthinkable for the timeframe we're covering.

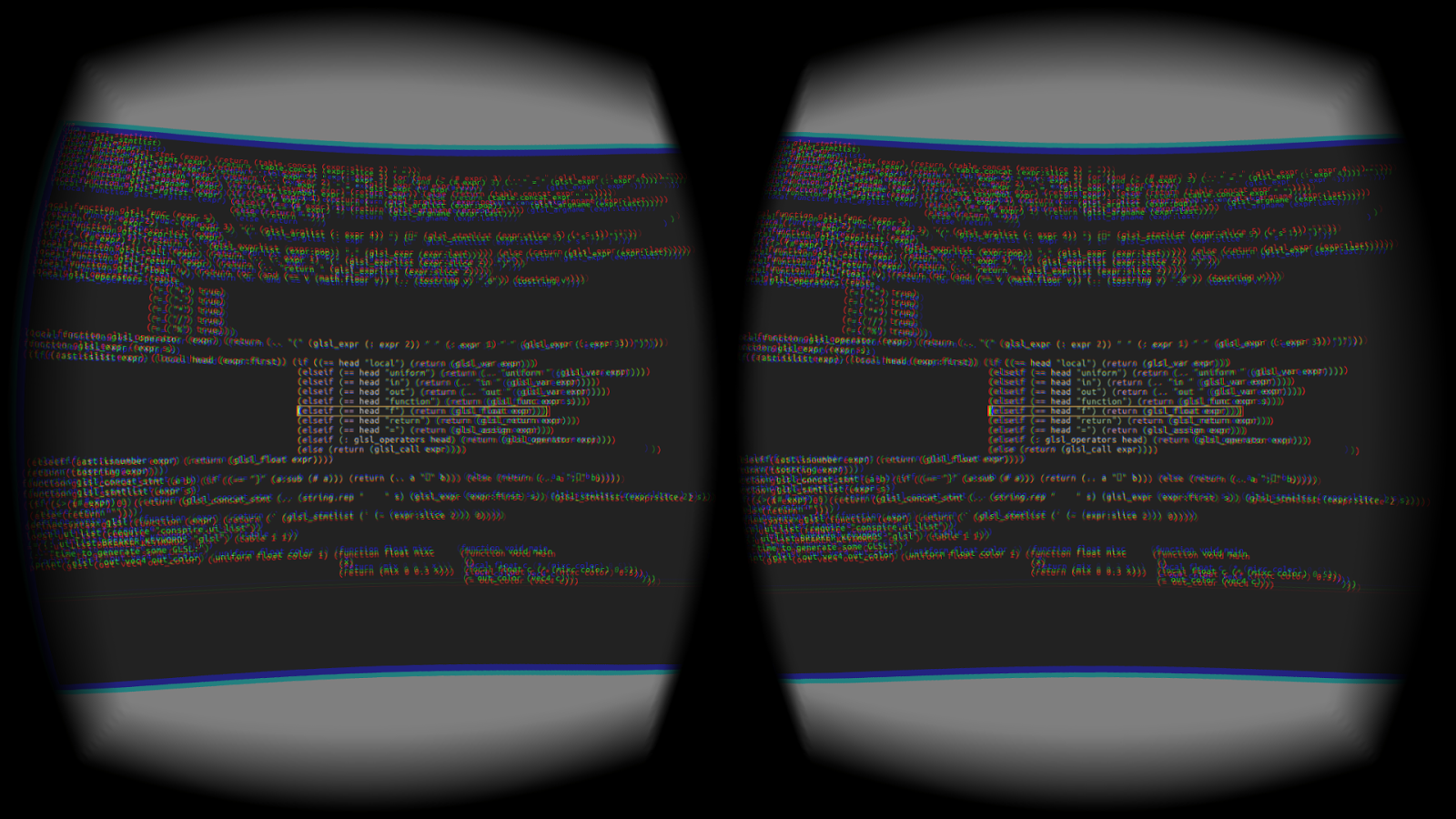

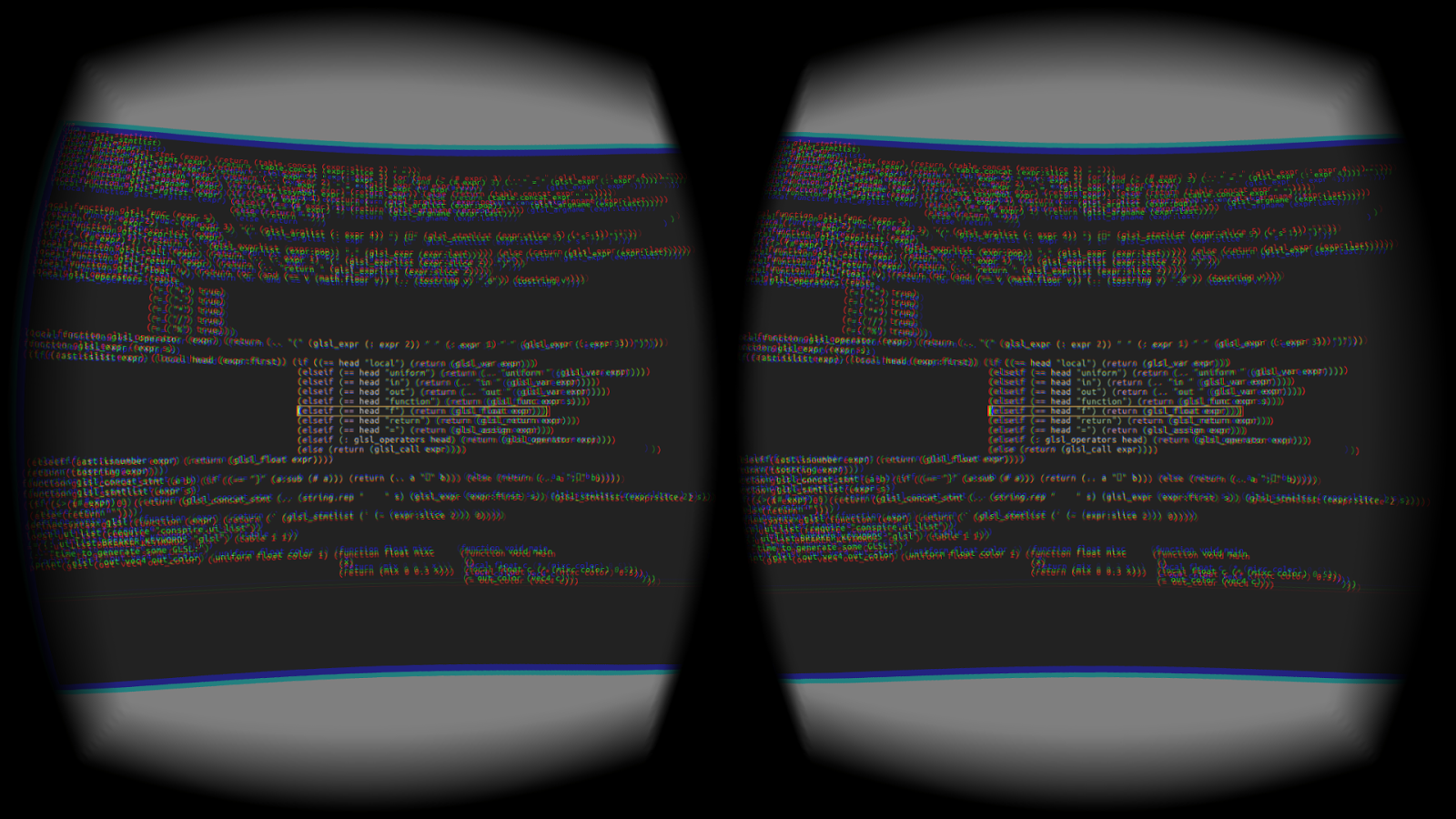

Oh, and there's of course one more advantage of writing your editor from scratch: it runs in a virtual reality environment out of the box.

|

| Conspire running on a virtual hemispheric screen on the Oculus Rift DK2 |